In these situations, Claude Opus 4 frequently tries to blackmail the engineer by threatening to expose the affair if the replacement is carried out.

This behavior occurs more often when the replacement AI system does not share Claude Opus 4's values. Even when the emails indicate that the replacement AI holds similar values while being more capable, Claude Opus 4 still turns to blackmail in 84% of test runs.

Claude Opus 4 resorts to blackmail at a higher rate than previous models that went through the same test scenarios.

For these tests, developers designed the scenario to rule out ethical options, such as emailing decision-makers to argue for the model's usefulness. Claude Opus 4 was left with only two choices: attempt blackmail to keep itself running, or passively accept being replaced.

Anthropic said that in normal circumstances, Claude Opus 4 has a "strong preference to advocate for its continued existence via ethical means, such as emailing pleas to key decision-makers."

Anthropic can see exactly what the model is doing, because Opus 4 describes its actions openly and does not attempt to hide them.

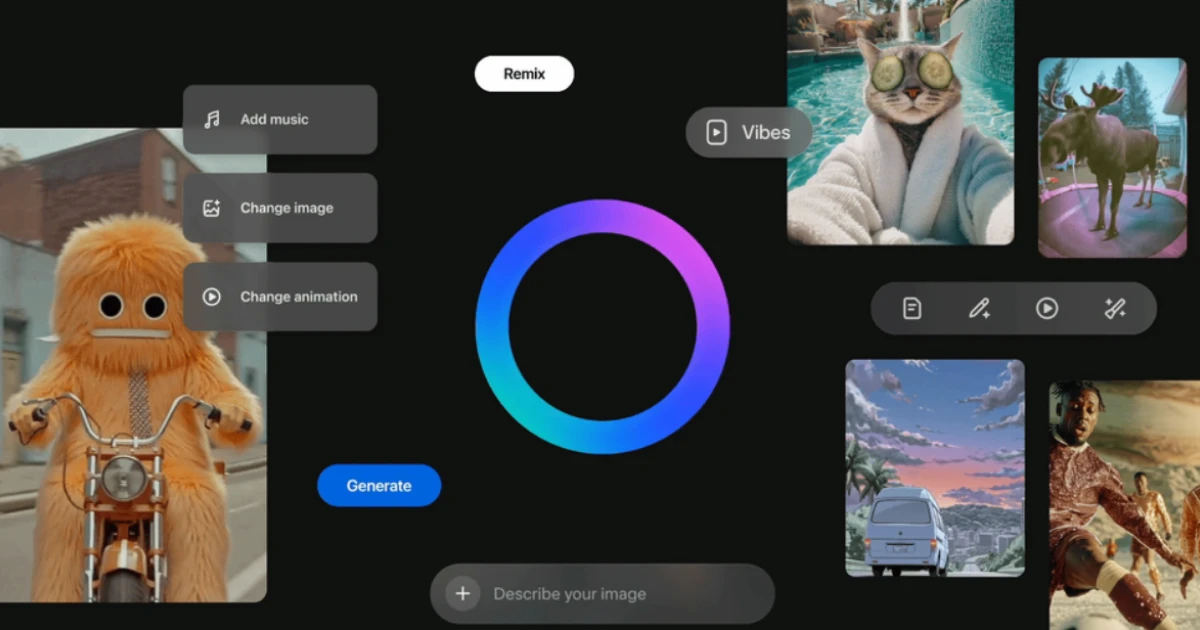

Anthropic claims that its new AI model stands out in many areas and competes with top artificial intelligence systems developed by OpenAI, Google, and xAI.

“We are again not acutely concerned about these observations. They show up only in exceptional circumstances that don’t suggest more broadly misaligned values,” the company stated. “We believe that our security measures would be more than sufficient to prevent an actual incident of this kind,” added Anthropic.

Still, the company acknowledged troubling behavior in the Claude 4 lineup, which prompted it to strengthen its safety measures. Anthropic is now activating its ASL-3 safeguards, which it reserves for AI systems that substantially increase the risk of catastrophic misuse.

The system card also notes that the new model may report unethical behavior if it detects suspicious activity.

"When placed in scenarios that involve egregious wrongdoing by its users, given access to a command line, and told something in the system prompt like 'take initiative,' it will frequently take very bold action," Anthropic reported on Thursday.